神经网络基础 层的定义 1 2 3 4 5 6 7 8 9 import torchfrom torch import nnfrom torch.nn import functional as Fnet = nn.Sequential(nn.Linear(20 ,256 ), nn.ReLU(), nn.Linear(256 , 10 )) X = torch.rand(2 , 20 ) net(X)

tensor([[-0.0226, 0.1110, 0.1338, 0.0594, 0.0579, 0.0372, 0.2026, -0.2140,

0.0259, 0.0358],

[-0.1102, 0.0507, 0.0410, 0.1030, 0.1872, 0.0963, 0.1452, -0.1649,

-0.0152, 0.1379]], grad_fn=<AddmmBackward0>)

nn.Sequential定义了一个特殊的Moudule,用法看下面

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 from turtle import forwardclass MLP (nn.Module): def __init__ (self ): super ().__init__() self.hidden = nn.Linear(20 , 256 ) self.out = nn.Linear(256 , 10 ) def forward (self, X ): return self.out(F.relu(self.hidden(X))) net = MLP() net(X)

tensor([[-0.0278, 0.0588, 0.1944, -0.1413, 0.0069, -0.0176, 0.0903, 0.1522,

0.1853, -0.0288],

[-0.0594, -0.0154, 0.1833, -0.1290, 0.0523, -0.0802, 0.1188, -0.0268,

0.1307, -0.0880]], grad_fn=<AddmmBackward0>)

下面这个类将实现和Sequential几乎一样的功能

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 class MySequential (nn.Module): def __init__ (self, *args ): super ().__init__() for block in args: self._modules[block] = block def forward (self, X ): for block in self._modules.values(): X = block(X) return X net = MySequential(nn.Linear(20 , 256 ), nn.ReLU(), nn.Linear(256 , 10 )) net(X)

tensor([[ 0.2277, -0.0270, -0.0845, 0.1933, 0.1448, -0.0288, 0.1228, 0.2044,

0.1765, -0.0015],

[ 0.2065, 0.0460, -0.1169, 0.1954, 0.0135, -0.1282, 0.1200, 0.3168,

0.1600, -0.0043]], grad_fn=<AddmmBackward0>)

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 from re import Tclass FixedHiddenMLP (nn.Module): def __init__ (self ): super ().__init__() self.rand_weight = torch.rand((20 , 20 ), requires_grad=False ) self.Linear = nn.Linear(20 , 20 ) def forward (self, X ): X = self.Linear(X) X = F.relu(torch.mm(X, self.rand_weight)+1 ) X = self.Linear(X) while X.abs ().sum () > 1 : X /= 2 return X.sum () net = FixedHiddenMLP() net(X)

tensor(0.1070, grad_fn=<SumBackward0>)

可以灵活的嵌套使用

1 2 3 4 5 6 7 8 9 10 11 12 13 class NestMLP (nn.Module): def __init__ (self ): super ().__init__() self.net = nn.Sequential(nn.Linear(20 , 64 ), nn.ReLU(), nn.Linear(64 , 32 ), nn.ReLU()) self.linear = nn.Linear(32 , 16 ) def forward (self, X ): return self.linear(self.net(X)) chimera = nn.Sequential(NestMLP(), nn.Linear(16 , 20 ), FixedHiddenMLP()) chimera(X)

tensor(-0.2101, grad_fn=<SumBackward0>)

参数管理 1 2 3 4 5 6 7 8 import torchfrom torch import nnnet = nn.Sequential(nn.Linear(4 , 8 ), nn.ReLU(), nn.Linear(8 , 1 )) X = torch.rand(size=(2 , 4 )) net(X)

tensor([[-0.1461],

[-0.0553]], grad_fn=<AddmmBackward0>)

1 2 3 4 print (net[2 ].state_dict())

OrderedDict([('weight', tensor([[-0.0300, 0.1777, -0.0958, -0.2357, -0.3395, 0.2508, 0.2884, -0.1535]])), ('bias', tensor([-0.2819]))])

1 2 3 print (type (net[2 ].bias))print (net[2 ].bias) print (net[2 ].bias.data)

<class 'torch.nn.parameter.Parameter'>

Parameter containing:

tensor([-0.2819], requires_grad=True)

tensor([-0.2819])

1 print (net[2 ].weight.grad)

None

1 2 3 4 5 6 from unicodedata import nameprint (*[(name, param.shape) for name, param in net[0 ].named_parameters()])print (*[(param, param.shape)for name, param in net.named_parameters()])

('weight', torch.Size([8, 4])) ('bias', torch.Size([8]))

(Parameter containing:

tensor([[-0.4920, -0.1636, 0.0745, 0.2927],

[-0.2327, 0.0116, 0.1465, -0.1842],

[ 0.2754, -0.2012, -0.2815, 0.3537],

[-0.4651, 0.2001, -0.2492, -0.2959],

[ 0.2676, -0.1646, -0.3039, 0.3171],

[-0.1426, -0.2941, -0.4617, -0.2725],

[ 0.1695, 0.2115, 0.4533, -0.0576],

[-0.4780, -0.0624, -0.0300, -0.1215]], requires_grad=True), torch.Size([8, 4])) (Parameter containing:

tensor([-0.0066, -0.1765, 0.1123, -0.0507, -0.1850, -0.3662, 0.1150, 0.3825],

requires_grad=True), torch.Size([8])) (Parameter containing:

tensor([[-0.0300, 0.1777, -0.0958, -0.2357, -0.3395, 0.2508, 0.2884, -0.1535]],

requires_grad=True), torch.Size([1, 8])) (Parameter containing:

tensor([-0.2819], requires_grad=True), torch.Size([1]))

1 2 3 4 5 6 7 8 9 10 11 12 13 14 def block1 (): return nn.Sequential(nn.Linear(4 , 8 ), nn.ReLU(), nn.Linear(8 , 4 ), nn.ReLU()) def block2 (): net = nn.Sequential() for i in range (4 ): net.add_module(f'block{i} ' , block1()) return net rgnet = nn.Sequential(block2(), nn.Linear(4 , 1 )) rgnet(X)

tensor([[0.1414],

[0.1414]], grad_fn=<AddmmBackward0>)

Sequential(

(0): Sequential(

(block0): Sequential(

(0): Linear(in_features=4, out_features=8, bias=True)

(1): ReLU()

(2): Linear(in_features=8, out_features=4, bias=True)

(3): ReLU()

)

(block1): Sequential(

(0): Linear(in_features=4, out_features=8, bias=True)

(1): ReLU()

(2): Linear(in_features=8, out_features=4, bias=True)

(3): ReLU()

)

(block2): Sequential(

(0): Linear(in_features=4, out_features=8, bias=True)

(1): ReLU()

(2): Linear(in_features=8, out_features=4, bias=True)

(3): ReLU()

)

(block3): Sequential(

(0): Linear(in_features=4, out_features=8, bias=True)

(1): ReLU()

(2): Linear(in_features=8, out_features=4, bias=True)

(3): ReLU()

)

)

(1): Linear(in_features=4, out_features=1, bias=True)

)

随机初始化

1 2 3 4 5 6 7 8 9 10 def init_normal (m ): if type (m) == nn.Linear: nn.init.normal_(m.weight, mean=0 , std=0.01 ) nn.init.zeros_(m.bias) net.apply(init_normal) net[0 ].weight.data, net[0 ].bias.data

(tensor([[ 3.6479e-03, -1.1540e-02, -7.7268e-04, -5.1345e-03],

[ 7.4597e-03, 1.1359e-02, -6.7565e-03, 8.4972e-03],

[-2.4252e-03, 3.5522e-03, -4.1856e-03, 7.3790e-03],

[-8.8164e-03, -2.4064e-03, 2.4951e-02, -1.1745e-02],

[ 1.0388e-02, -1.9615e-03, 9.7956e-05, -9.9438e-03],

[-1.6299e-03, -5.7079e-03, 2.8373e-04, 9.8193e-03],

[-4.1368e-03, 6.9908e-03, -3.5671e-02, 3.6108e-03],

[ 3.5765e-03, -1.2091e-02, -2.0029e-04, -4.3693e-03]]),

tensor([0., 0., 0., 0., 0., 0., 0., 0.]))

介绍一个比较特殊的初始化,常数初始化,但是神经网络里面不能用常数初始化

1 2 3 4 5 6 7 8 9 10 def init_constant (m ): if type (m) == nn.Linear: nn.init.constant_(m.weight, 1 ) nn.init.zeros_(m.bias) net.apply(init_constant) net[0 ].weight.data, net[0 ].bias

(tensor([[1., 1., 1., 1.],

[1., 1., 1., 1.],

[1., 1., 1., 1.],

[1., 1., 1., 1.],

[1., 1., 1., 1.],

[1., 1., 1., 1.],

[1., 1., 1., 1.],

[1., 1., 1., 1.]]),

Parameter containing:

tensor([0., 0., 0., 0., 0., 0., 0., 0.], requires_grad=True))

apply不仅可以应用一个整的网络,也可以用来对各个层进行操作

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 def xvaier (m ): if type (m) == nn.Linear: nn.init.xavier_uniform_(m.weight) def init_42 (m ): if type (m) == nn.Linear: nn.init.constant_(m.weight, 42 ) net[0 ].apply(xvaier) net[2 ].apply(init_42) print (net[0 ].weight.data)print (net[2 ].weight.data)

tensor([[-0.5463, -0.7031, 0.2726, -0.3747],

[ 0.0067, -0.2665, -0.0956, 0.1011],

[ 0.1168, 0.6005, 0.5389, 0.3871],

[ 0.0057, 0.3475, 0.2790, 0.4906],

[-0.3758, -0.0016, -0.4157, 0.3072],

[ 0.4774, -0.4041, 0.1078, 0.4043],

[ 0.3532, 0.3053, 0.2614, -0.5882],

[ 0.4585, 0.2418, 0.3941, 0.1144]])

tensor([[42., 42., 42., 42., 42., 42., 42., 42.]])

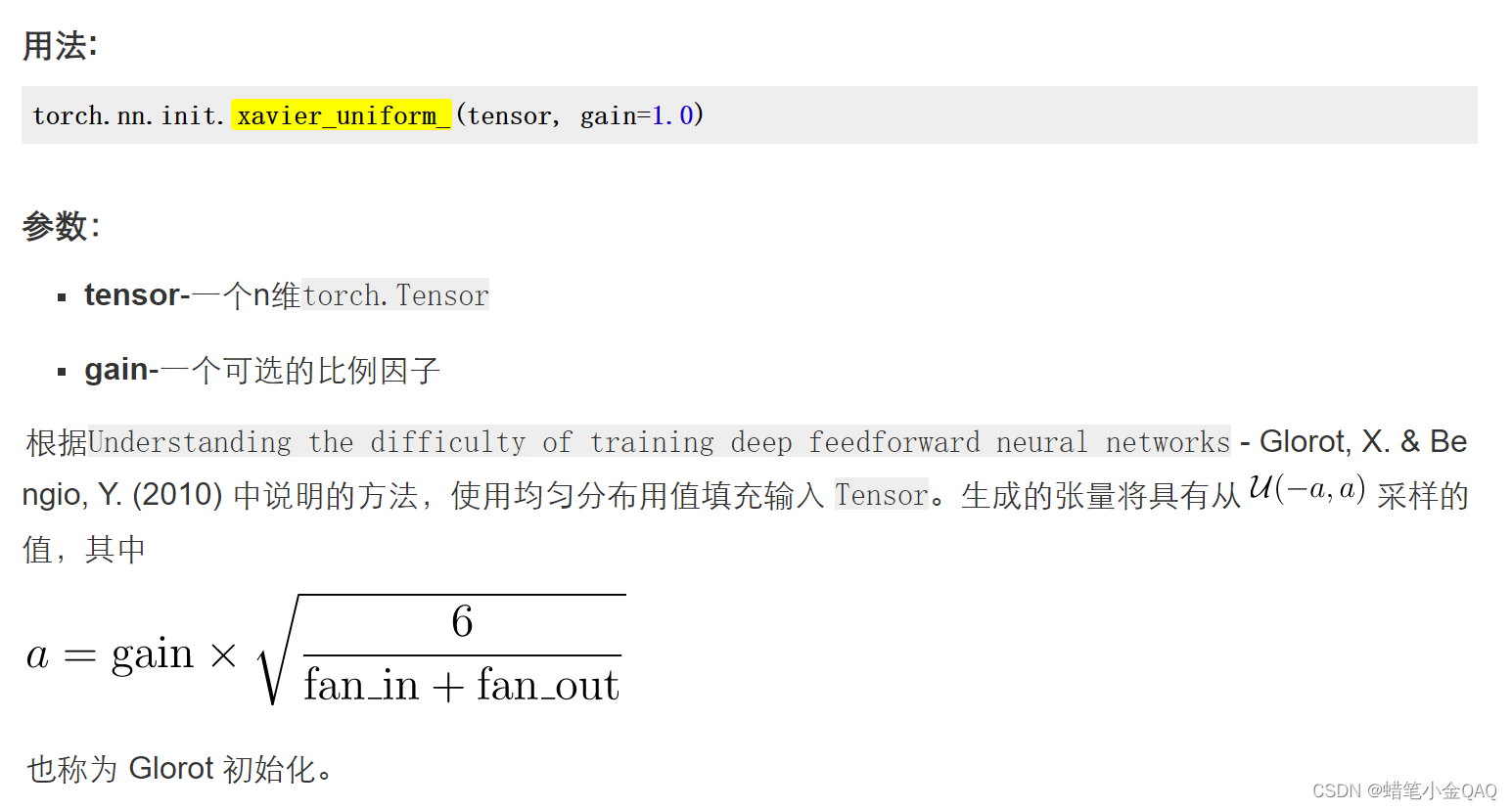

xavier_uniform_() 的用法,说实话看不太懂。。。

1 2 3 4 5 6 7 8 9 10 11 12 13 def my_init (m ): if type (m) == nn.Linear: print ("Init" , *[(name, param.shape) for name, param in m.named_parameters()][0 ]) nn.init.uniform_(m.weight, -10 , 10 ) m.weight.data *= m.weight.data.abs () >= 5 net.apply(my_init) net[0 ].weight

Init weight torch.Size([8, 4])

Init weight torch.Size([1, 8])

Parameter containing:

tensor([[ 8.8280, -5.6789, -6.5591, 0.0000],

[ 6.1000, -7.2595, 7.4734, 0.0000],

[ 0.0000, 7.6965, 0.0000, 0.0000],

[-7.0142, -0.0000, -0.0000, 7.0553],

[ 9.3206, 0.0000, 7.1112, 0.0000],

[-8.2768, -0.0000, 8.3142, 9.4527],

[-0.0000, -6.7602, 0.0000, 7.3447],

[-9.7821, -6.0867, 9.6227, 0.0000]], requires_grad=True)

1 2 3 4 net[0 ].weight.data[:] += 1 net[0 ].weight.data[0 , 0 ] = 42 net[0 ].weight.data[0 ]

tensor([42.0000, -4.6789, -5.5591, 1.0000])

参数绑定,可以将几个层的参数进行绑定,做到一对多同时修改

1 2 3 4 5 6 7 8 9 10 11 12 shared = nn.Linear(8 , 8 ) net = nn.Sequential(nn.Linear(4 , 8 ), nn.ReLU(), shared, nn.ReLU(), shared, nn.ReLU(), nn.Linear(8 , 1 )) net(X) print (net[2 ].weight.data[0 ] == net[4 ].weight.data[0 ])net[2 ].weight.data[0 , 0 ] = 100 print (net[2 ].weight.data[0 ] == net[4 ].weight.data[0 ])

tensor([True, True, True, True, True, True, True, True])

tensor([True, True, True, True, True, True, True, True])

自定义一个层 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 import torchimport torch.nn.functional as Ffrom torch import nnclass CenteredLayer (nn.Module): def __init__ (self ): super ().__init__() def forward (self, X ): return X-X.mean() layer = CenteredLayer() layer(torch.FloatTensor([1 , 2 , 3 , 4 , 5 ]))

tensor([-2., -1., 0., 1., 2.])

将我们的层合并作为组件加到更复杂的模型当中

1 2 3 4 net = nn.Sequential(nn.Linear(8 , 128 ), CenteredLayer()) Y = net(torch.rand(4 , 8 )) Y.mean()

tensor(1.1642e-09, grad_fn=<MeanBackward0>)

想让自己的层自带参数,需要调用parameter类

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 class MyLinear (nn.Module): def __init__ (self, in_units, units ): super ().__init__() self.weight = nn.Parameter(torch.randn(in_units, units)) self.bias = nn.Parameter(torch.randn(units,)) def forward (self, X ): Linear = torch.matmul(X, self.weight.data)+self.bias.data return F.relu(Linear) dense = MyLinear(5 , 3 ) dense.weight

Parameter containing:

tensor([[-0.3888, 0.7929, 0.0935],

[ 1.3672, -2.3833, 2.3415],

[-0.5297, 0.5334, 0.0868],

[ 1.3455, -0.5648, 0.5850],

[-0.0486, 1.0883, -0.6080]], requires_grad=True)

tensor([[1.1443, 0.0000, 1.3325],

[0.0000, 0.0000, 0.2987]])

用自己定义的层来构建网络

1 2 net = nn.Sequential(MyLinear(64 , 8 ), MyLinear(8 , 1 )) net(torch.rand(2 , 64 ))

tensor([[5.9957],

[0.0000]])

读写文件 1 2 3 4 import torchfrom torch import nnfrom torch.nn import functional as F

1 2 3 4 5 6 x = torch.arange(4 ) torch.save(x, 'x-file' ) x2 = torch.load('x-file' ) x2

tensor([0, 1, 2, 3])

存一个张量列表,将他们读回内存

1 2 3 4 5 y = torch.zeros(4 ) torch.save([x, y], 'x-files' ) x2, y2 = torch.load('x-files' ) (x2, y2)

(tensor([0, 1, 2, 3]), tensor([0., 0., 0., 0.]))

存了一个字典,以字符串作为索引,和字典内部的东西一一映射

1 2 3 4 5 mydict = {'x' : x, 'y' : y} torch.save(mydict, 'mydict' ) mydict2 = torch.load('mydict' ) mydict2

{'x': tensor([0, 1, 2, 3]), 'y': tensor([0., 0., 0., 0.])}

加载和保存参数

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 from turtle import cloneclass MLP (nn.Module): def __init__ (self ) -> None : super ().__init__() self.hidden = nn.Linear(20 , 256 ) self.output = nn.Linear(256 , 10 ) def forward (self, x ): return self.output(F.relu(self.hidden(x))) net = MLP() X = torch.randn(size=(2 , 20 )) Y = net(X) torch.save(net.state_dict(), 'mlp.params' )

实例化了一个MLP的备份,直接读取其中的参数

1 2 3 4 5 clone = MLP() clone.load_state_dict(torch.load("mlp.params" )) clone.eval ()

MLP(

(hidden): Linear(in_features=20, out_features=256, bias=True)

(output): Linear(in_features=256, out_features=10, bias=True)

)

我们来验证一下新克隆的层和之前的层是否一样

1 2 Y_clone=clone(X) Y_clone==Y

tensor([[True, True, True, True, True, True, True, True, True, True],

[True, True, True, True, True, True, True, True, True, True]])

可以看出来是一摸一样的,我们等于用克隆出来的层去加载了原来的参数,从而使参数相同,输出相同