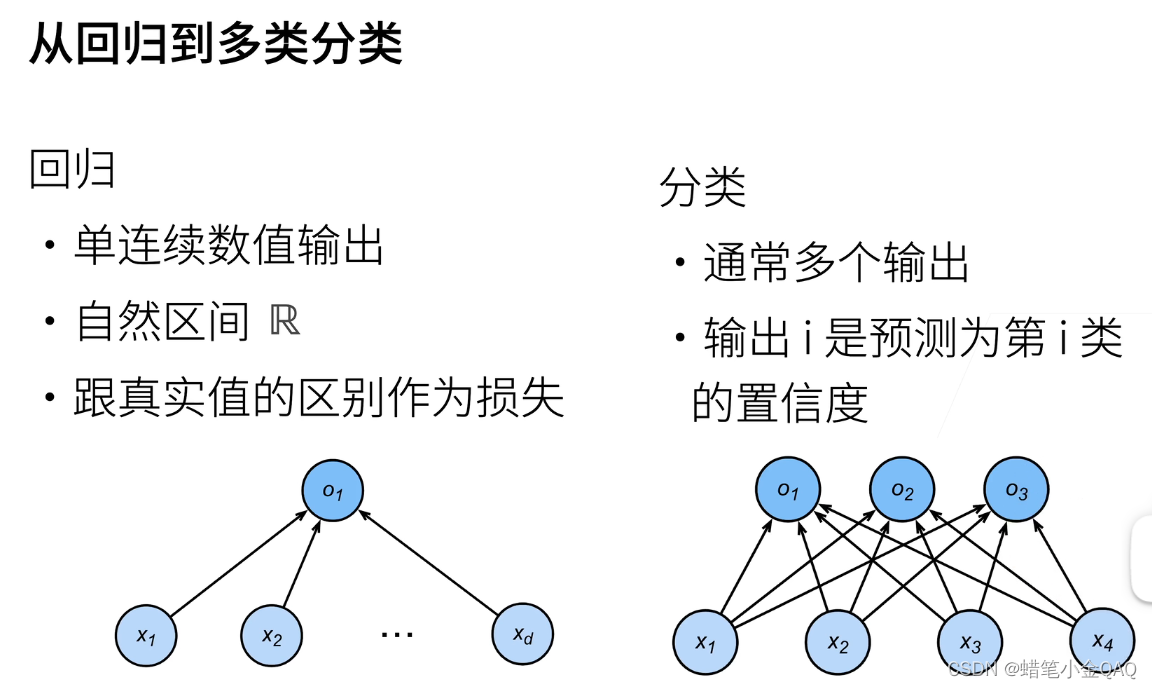

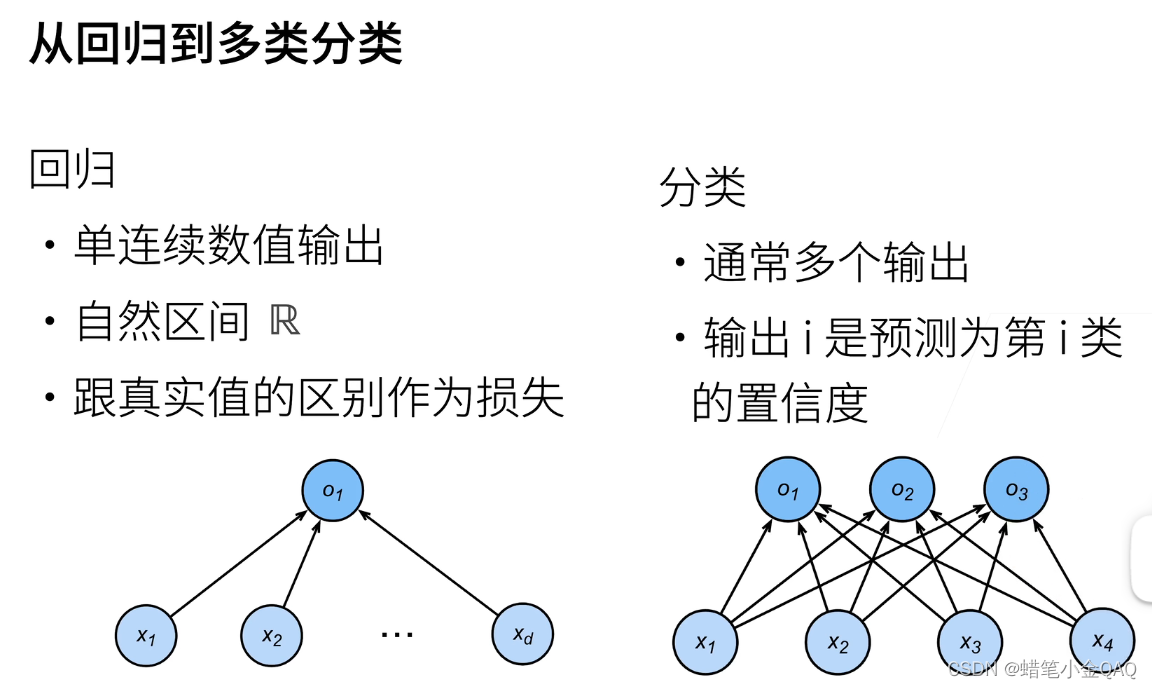

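Softmax Regression

Introduction

Regression answers "how much?" or "how many?" questions. Examples include predicting the price a house will sell for, the number of wins a baseball team might get, or the number of days a patient will stay in hospital.

In practice, we are also interested in classification problems: instead of asking "how much?", we ask "which one?"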
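A regression model outputs a number, but a classifier has to output a category. A common way to represent a categorical label is a one-hot vector: it has one component per class, with a 1 at the true class and 0 everywhere else. The minimal sketch below uses a hypothetical three-class example (cat, chicken, dog) and plain Python lists instead of tensors:

```python
# Hypothetical three-class example; the class names are for illustration only
classes = ["cat", "chicken", "dog"]

def one_hot(label_index, num_classes):
    """Return a one-hot list with a 1 at position label_index."""
    vec = [0] * num_classes
    vec[label_index] = 1
    return vec

for idx, name in enumerate(classes):
    print(name, one_hot(idx, len(classes)))
# cat [1, 0, 0]
# chicken [0, 1, 0]
# dog [0, 0, 1]
```

The label vector has the same length as the model's output vector, so the model's predicted probabilities can be compared with it component by component.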

Image classification dataset

```python
%matplotlib inline
import torch
import torchvision
from torch.utils import data
from torchvision import transforms
from d2l import torch as d2l

d2l.use_svg_display()  # render figures as SVG for sharper plots
```

Download and read the dataset

```python
# ToTensor converts each PIL image to a float32 tensor of shape (C, H, W)
# and scales the pixel values from [0, 255] to [0, 1]
trans = transforms.ToTensor()
mnist_train = torchvision.datasets.FashionMNIST(
    root="./data", train=True, transform=trans, download=True)
mnist_test = torchvision.datasets.FashionMNIST(
    root="./data", train=False, transform=trans, download=True)
len(mnist_train), len(mnist_test)
```

```
(60000, 10000)
```

Map numeric labels to text labels

```python
def get_fashion_mnist_labels(labels):
    """Return the text labels of the Fashion-MNIST dataset"""
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                   'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    return [text_labels[int(i)] for i in labels]
```

Visualization

```python
def show_images(imgs, num_rows, num_cols, titles=None, scale=1.5):
    """Plot a list of images"""
    figsize = (num_cols * scale, num_rows * scale)
    _, axes = d2l.plt.subplots(num_rows, num_cols, figsize=figsize)
    axes = axes.flatten()
    for i, (ax, img) in enumerate(zip(axes, imgs)):
        if torch.is_tensor(img):
            # image stored as a tensor
            ax.imshow(img.numpy())
        else:
            # PIL image
            ax.imshow(img)
        # hide the axis ticks
        ax.axes.get_xaxis().set_visible(False)
        ax.axes.get_yaxis().set_visible(False)
        if titles:
            ax.set_title(titles[i])
    return axes
```

```python
X, y = next(iter(data.DataLoader(mnist_train, batch_size=18)))
show_images(X.reshape(18, 28, 28), 2, 9, titles=get_fashion_mnist_labels(y))
```

```
array([<AxesSubplot:title={'center':'ankle boot'}>,
       <AxesSubplot:title={'center':'t-shirt'}>,
       <AxesSubplot:title={'center':'t-shirt'}>,
       <AxesSubplot:title={'center':'dress'}>,
       <AxesSubplot:title={'center':'t-shirt'}>,
       <AxesSubplot:title={'center':'pullover'}>,
       <AxesSubplot:title={'center':'sneaker'}>,
       <AxesSubplot:title={'center':'pullover'}>,
       <AxesSubplot:title={'center':'sandal'}>,
       <AxesSubplot:title={'center':'sandal'}>,
       <AxesSubplot:title={'center':'t-shirt'}>,
       <AxesSubplot:title={'center':'ankle boot'}>,
       <AxesSubplot:title={'center':'sandal'}>,
       <AxesSubplot:title={'center':'sandal'}>,
       <AxesSubplot:title={'center':'sneaker'}>,
       <AxesSubplot:title={'center':'ankle boot'}>,
       <AxesSubplot:title={'center':'trouser'}>,
       <AxesSubplot:title={'center':'t-shirt'}>], dtype=object)
```

Reading mini-batches

```python
batch_size = 256

def get_dataloader_workers():
    """Use 4 worker processes to read the data"""
    return 4

train_iter = data.DataLoader(mnist_train, batch_size, shuffle=True,
                             num_workers=get_dataloader_workers())
```

Time needed to read the whole training set once

```python
timer = d2l.Timer()
for X, y in train_iter:
    continue
f'{timer.stop():.2f} sec'
```

```
'3.66 sec'
```

Putting all the components together

```python
def load_data_fashion_mnist(batch_size, resize=None):
    """Download the Fashion-MNIST dataset and then load it into memory"""
    trans = [transforms.ToTensor()]
    if resize:
        # resize the PIL image first, then convert it to a tensor
        trans.insert(0, transforms.Resize(resize))
    trans = transforms.Compose(trans)
    mnist_train = torchvision.datasets.FashionMNIST(
        root="./data", train=True, transform=trans, download=True)
    mnist_test = torchvision.datasets.FashionMNIST(
        root="./data", train=False, transform=trans, download=True)
    # shuffle the training set each epoch; keep the test set in a fixed order
    return (data.DataLoader(mnist_train, batch_size, shuffle=True,
                            num_workers=get_dataloader_workers()),
            data.DataLoader(mnist_test, batch_size, shuffle=False,
                            num_workers=get_dataloader_workers()))
```

Resizing images

```python
train_iter, test_iter = load_data_fashion_mnist(32, resize=64)
for X, y in train_iter:
    # X has shape (batch size, channels, height, width)
    print(X.shape, X.dtype, y.shape, y.dtype)
    break
```

```
torch.Size([32, 1, 64, 64]) torch.float32 torch.Size([32]) torch.int64
```

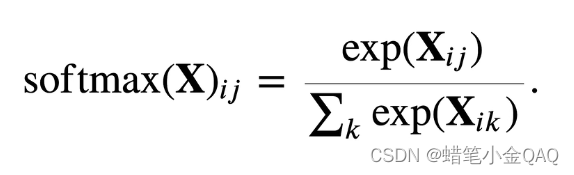

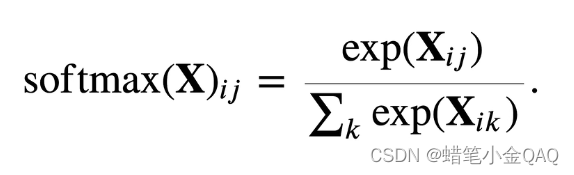

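softmax: implementation from scratch

Softmax and its regression mechanism, explained very well

```python
import torch
from IPython import display
from d2l import torch as d2l

batch_size = 256
# load_data_fashion_mnist is the function defined above
train_iter, test_iter = load_data_fashion_mnist(batch_size)
```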

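```python
num_inputs = 784   # each 28 x 28 image is flattened into a vector of length 784
num_outputs = 10   # one output per class

# weights drawn from N(0, 0.01^2), biases set to 0; both require gradients
W = torch.normal(0, 0.01, size=(num_inputs, num_outputs), requires_grad=True)
b = torch.zeros(num_outputs, requires_grad=True)
```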

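```python
def softmax(X):
    X_exp = torch.exp(X)                    # exponentiate every element
    partition = X_exp.sum(1, keepdim=True)  # sum of each row, shape (n, 1)
    return X_exp / partition                # broadcasting divides each row by its sum
```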
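The softmax above exponentiates the raw scores directly, so a large score overflows: for float32, `torch.exp` of a value above about 88.7 gives `inf`, and `inf / inf` gives `nan`. Subtracting each row's maximum before exponentiating leaves the result unchanged, because the factor exp(-max) cancels between numerator and denominator, and it keeps every exponent at most 0. A minimal sketch for a single row, written in plain Python with lists instead of tensors (an assumption so that it runs without torch):

```python
import math

def stable_softmax(row):
    """Softmax of one row of scores, shifted by the row max to avoid overflow."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]  # every exponent is <= 0, so no overflow
    total = sum(exps)
    return [e / total for e in exps]

scores = [1000.0, 1001.0, 1002.0]  # math.exp(1000.0) on its own raises OverflowError
probs = stable_softmax(scores)
print([round(p, 4) for p in probs])  # [0.09, 0.2447, 0.6652]
print(round(sum(probs), 6))          # 1.0
```

The shifted version gives the same probabilities as the naive formula whenever the naive one does not overflow, since softmax only depends on differences between scores.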

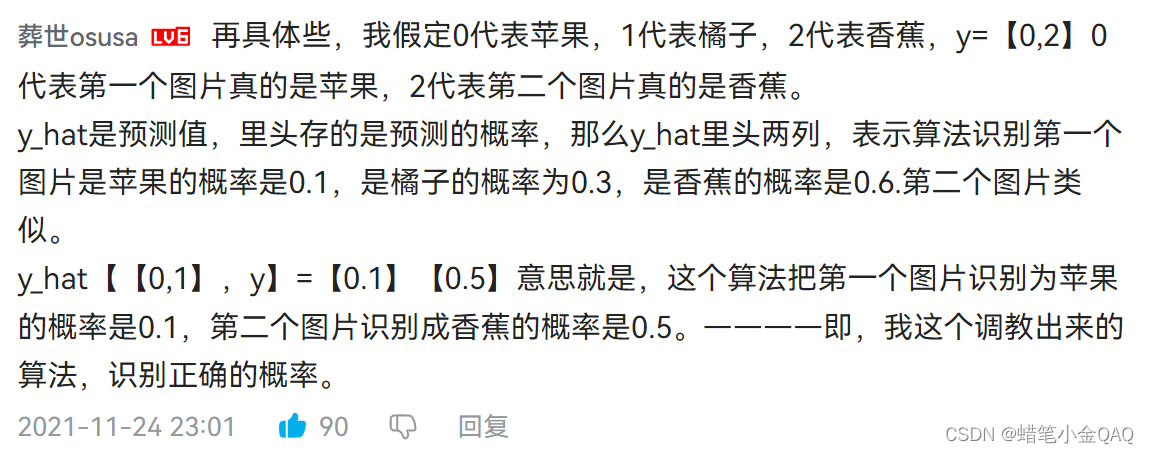

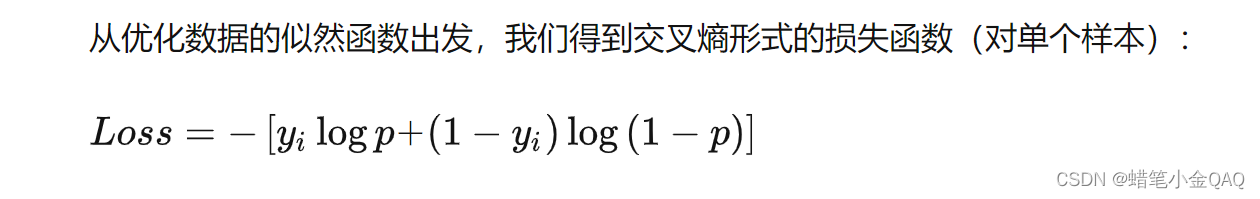

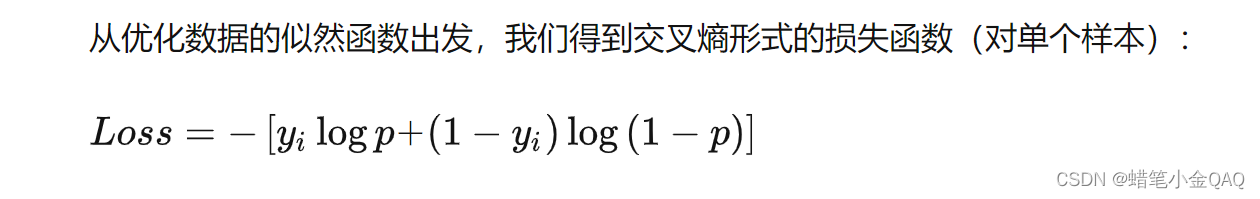

Understanding softmax regression

Cross-entropy formula for multiclass classifiers
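For reference, these are the standard formulas, written to match the code in this section: `net` computes the outputs o = xW + b and applies softmax, and q is the number of classes (q = 10 for Fashion-MNIST). Softmax turns the outputs into probabilities, and cross-entropy compares them with the one-hot label y:

$$
\mathbf{o} = \mathbf{x}\mathbf{W} + \mathbf{b}, \qquad
\hat{y}_j = \mathrm{softmax}(\mathbf{o})_j = \frac{\exp(o_j)}{\sum_{k=1}^{q} \exp(o_k)}
$$

$$
l(\mathbf{y}, \hat{\mathbf{y}}) = -\sum_{j=1}^{q} y_j \log \hat{y}_j
$$

Because y is one-hot, only the term for the true class c is nonzero, so the loss reduces to l = -log ŷ_c. This is the quantity that `cross_entropy` computes for each sample.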

```python
def net(X):
    # flatten each image into a row of length 784, apply the linear layer, then softmax
    return softmax(torch.matmul(X.reshape((-1, W.shape[0])), W) + b)
```

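Testing our softmax:

For any random input, softmax turns every element into a non-negative number. Moreover, as a probability distribution requires, each row sums to 1.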

```python
X = torch.normal(0, 1, (2, 5))
X_prob = softmax(X)
X_prob, X_prob.sum(1)
```

```
(tensor([[0.2772, 0.0864, 0.1937, 0.0683, 0.3745],
         [0.0335, 0.2456, 0.1310, 0.2208, 0.3691]]),
 tensor([1.0000, 1.0000]))
```

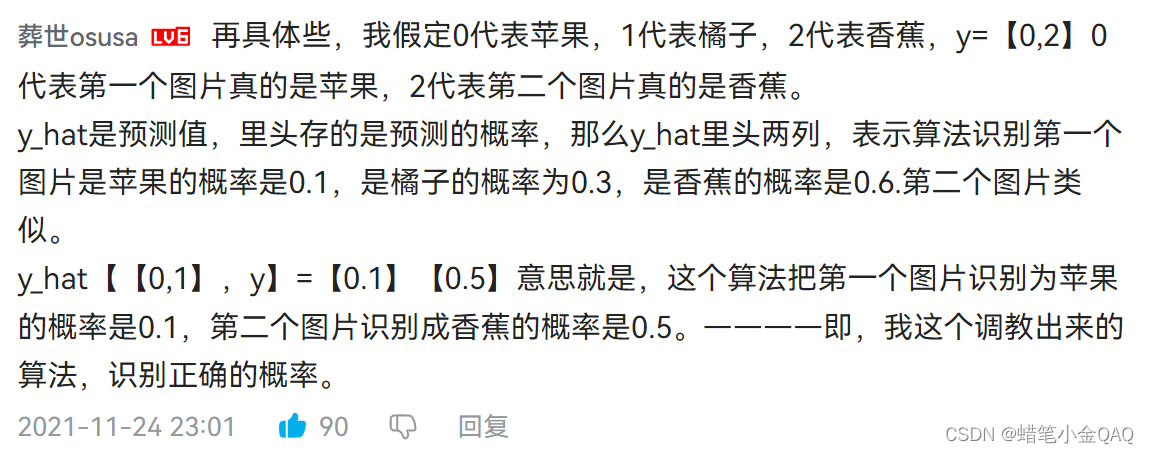

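```python
y = torch.tensor([0, 2])  # true labels of two samples
y_hat = torch.tensor([[0.1, 0.3, 0.6], [0.3, 0.2, 0.5]])  # predicted probabilities
# for each sample, pick the predicted probability of its true class
y_hat[[0, 1], y]
```

```
tensor([0.1000, 0.5000])
```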

Loss function

```python
def cross_entropy(y_hat, y):
    # -log of the probability assigned to each sample's true class
    return - torch.log(y_hat[range(len(y_hat)), y])

cross_entropy(y_hat, y)
```

```
tensor([2.3026, 0.6931])
```
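`y_hat[range(len(y_hat)), y]` uses advanced indexing: it pairs row i with column `y[i]`, picking out the predicted probability of each sample's true class. The same computation written with plain Python lists (a sketch for illustration, not torch code) makes that pairing explicit and reproduces the numbers above:

```python
import math

def cross_entropy_list(y_hat, y):
    """Per-sample cross-entropy: -log of the probability assigned to the true class."""
    # zip pairs each row of predictions with that sample's label
    return [-math.log(row[label]) for row, label in zip(y_hat, y)]

y_hat = [[0.1, 0.3, 0.6], [0.3, 0.2, 0.5]]
y = [0, 2]
print([round(l, 4) for l in cross_entropy_list(y_hat, y)])  # [2.3026, 0.6931]
```

If the true class is given probability 0, `math.log` raises `ValueError` here, while `torch.log` returns `-inf` and the loss becomes `inf`.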

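```python
def accuracy(y_hat, y):
    """Count the number of correct predictions"""
    if len(y_hat.shape) > 1 and y_hat.shape[1] > 1:
        # the predicted class is the index of the largest probability in each row
        y_hat = y_hat.argmax(axis=1)
    cmp = y_hat.type(y.dtype) == y  # match dtypes before comparing
    return float(cmp.type(y.dtype).sum())

accuracy(y_hat, y) / len(y)
```

```
0.5
```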

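Evaluating the accuracy of an arbitrary model net

```python
def evaluate_accuracy(net, data_iter):
    """Compute the accuracy of a model on the given dataset"""
    if isinstance(net, torch.nn.Module):
        net.eval()  # switch the model to evaluation mode
    metric = d2l.Accumulator(2)  # number of correct predictions, number of predictions
    with torch.no_grad():
        for X, y in data_iter:
            metric.add(accuracy(net(X), y), y.numel())
    return metric[0] / metric[1]
```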
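`evaluate_accuracy` keeps two running sums across batches: the number of correct predictions and the number of samples. The d2l package provides such a helper as `d2l.Accumulator`. The class below is a minimal sketch of that idea (modeled on the helper's behavior, not copied from its source), followed by a small usage example with made-up batch counts:

```python
class Accumulator:
    """Accumulate running sums over n variables."""

    def __init__(self, n):
        self.data = [0.0] * n

    def add(self, *args):
        # add each argument to the corresponding running sum
        self.data = [a + float(b) for a, b in zip(self.data, args)]

    def reset(self):
        self.data = [0.0] * len(self.data)

    def __getitem__(self, idx):
        return self.data[idx]

metric = Accumulator(2)       # [number of correct predictions, number of samples]
metric.add(1.0, 2)            # hypothetical batch 1: 1 correct out of 2
metric.add(3.0, 4)            # hypothetical batch 2: 3 correct out of 4
print(metric[0] / metric[1])  # 0.6666666666666666
```

Summing counts and dividing once at the end gives the accuracy over the whole dataset, even when the last batch is smaller than the others.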

I couldn't work out the rest of the implementation... I'll come back and explore that part later.
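The remaining from-scratch step is training: repeatedly compute the loss on a minibatch, take its gradient, and update W and b with minibatch SGD. The d2l book does this with torch autograd. The sketch below is a plain-Python stand-in under stated assumptions: a tiny hypothetical 2-D dataset with 3 classes, weights initialized to zero for simplicity, and the closed-form gradient of softmax cross-entropy with respect to the outputs, ∂l/∂o_j = ŷ_j - y_j. The function names are hypothetical and chosen so they do not clash with the ones above.

```python
import math
import random

def softmax_row(o):
    """Numerically stable softmax of one list of scores."""
    m = max(o)
    e = [math.exp(v - m) for v in o]
    s = sum(e)
    return [v / s for v in e]

def forward_row(x, W, b):
    """One row of softmax(X @ W + b); W holds one row per input feature."""
    return softmax_row([sum(x[i] * W[i][j] for i in range(len(x))) + b[j]
                        for j in range(len(b))])

def train_epoch(toy_data, W, b, lr, batch_size, rng):
    """One pass of minibatch SGD; returns the average loss seen during the pass."""
    rng.shuffle(toy_data)
    total_loss = 0.0
    for start in range(0, len(toy_data), batch_size):
        batch = toy_data[start:start + batch_size]
        gW = [[0.0] * len(b) for _ in W]
        gb = [0.0] * len(b)
        for x, y in batch:
            p = forward_row(x, W, b)
            total_loss -= math.log(p[y])
            for j in range(len(b)):
                d = p[j] - (1.0 if j == y else 0.0)  # dl/do_j = y_hat_j - y_j
                gb[j] += d
                for i in range(len(x)):
                    gW[i][j] += x[i] * d
        # SGD step using the gradient averaged over the minibatch
        for j in range(len(b)):
            b[j] -= lr * gb[j] / len(batch)
            for i in range(len(W)):
                W[i][j] -= lr * gW[i][j] / len(batch)
    return total_loss / len(toy_data)

def toy_accuracy(toy_data, W, b):
    """Fraction of samples whose most probable class equals the label."""
    correct = 0
    for x, y in toy_data:
        p = forward_row(x, W, b)
        correct += int(max(range(len(p)), key=lambda j: p[j]) == y)
    return correct / len(toy_data)

# Hypothetical toy data: a 3 x 3 grid of points around each of three class centers
centers = [(3.0, 0.0), (-3.0, 0.0), (0.0, 3.0)]
offsets = [-0.5, 0.0, 0.5]
toy_data = [((cx + dx, cy + dy), label)
            for label, (cx, cy) in enumerate(centers)
            for dx in offsets for dy in offsets]

W = [[0.0] * 3 for _ in range(2)]  # 2 inputs, 3 classes
b = [0.0] * 3
rng = random.Random(0)

losses = [train_epoch(toy_data, W, b, lr=0.1, batch_size=9, rng=rng) for _ in range(20)]
print(f"first-epoch loss {losses[0]:.4f}, last-epoch loss {losses[-1]:.4f}")
print("training accuracy:", toy_accuracy(toy_data, W, b))
```

Zero initialization is acceptable here because softmax regression is a convex model and the per-class gradients differ from the first step. For Fashion-MNIST, the same loop would run over batches of 784-dimensional inputs with 10 classes.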

softmax: concise implementation with the PyTorch framework

```python
import torch
from torch import nn
from d2l import torch as d2l

batch_size = 256
train_iter, test_iter = load_data_fashion_mnist(batch_size)
```

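```python
X = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
# sum along dim 0 (down the columns) and along dim 1 (across each row),
# keeping the reduced dimension so the results stay 2-D
X.sum(0, keepdim=True), X.sum(1, keepdim=True)
```

```
(tensor([[5., 7., 9.]]),
 tensor([[ 6.],
         [15.]]))
```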

softmax, Sequential, normal_

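```python
# PyTorch does not reshape the inputs implicitly, so a Flatten layer
# turns each 28 x 28 image into a vector of length 784 before the linear layer
net = nn.Sequential(nn.Flatten(), nn.Linear(784, 10))

def init_weight(m):
    # draw the weights of every linear layer from N(0, 0.01^2)
    if type(m) == nn.Linear:
        nn.init.normal_(m.weight, std=0.01)

net.apply(init_weight)
```

```
Sequential(
  (0): Flatten(start_dim=1, end_dim=-1)
  (1): Linear(in_features=784, out_features=10, bias=True)
)
```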

```python
loss = nn.CrossEntropyLoss(reduction='none')  # return one loss per sample, no averaging
```
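`nn.CrossEntropyLoss` expects raw, unnormalized outputs (logits): it combines log-softmax and negative log-likelihood in one step, which is why `net` above ends with `nn.Linear` and has no softmax layer. Working from logits allows the log-sum-exp trick, log Σ exp(o_k) = m + log Σ exp(o_k - m) with m = max_k o_k, so no exponent can overflow. A plain-Python sketch for a single sample (an illustration of the idea, not PyTorch's implementation):

```python
import math

def cross_entropy_from_logits(logits, label):
    """-log(softmax(logits)[label]) computed with the log-sum-exp trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(o - m) for o in logits))
    return log_sum_exp - logits[label]

# Equals -log of softmax([1000, 1001, 1002])[2] (about -log 0.6652),
# computed without ever evaluating exp(1002.0)
print(round(cross_entropy_from_logits([1000.0, 1001.0, 1002.0], 2), 4))  # 0.4076
```

Computing softmax and then taking the log separately would instead risk `exp` overflow for large logits and `log(0)` for very small probabilities.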

```python
trainer = torch.optim.SGD(net.parameters(), lr=0.1)
```

```python
num_epochs = 10
d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)
```

Reference: Li Mu's Dive into Deep Learning (《动手学深度学习》)

Softmax is hard. It took me a long time to understand even a little of it; I'm still a beginner.